Principles of Token Validation

Sometimes it’s good to take a little break from just solving the immediate problem at hand by cutting & pasting code found on the ‘net, and take a step back to contemplate the bigger picture and the general principles that make that code tick. Also, the wife DVR’d the Oscars ceremony and she’s about to do some binge watching – it looks like I have some unstructured time to fill and I don’t feel like opening my overflowing inbox this Sunday night.

Hence, welcome to a back-to-basics installment! If you are a veteran reader of this blog you probably won’t have much use for this, but with the advent of Windows Azure AD I feel there are a lot of you just ramping up. Hopefully this post will help shed light on one of the most fundamental tasks we perform while handling identity, and on why the initialization parameters you have to feed to our software are the way they are.

Note: This is by no means required reading; you can successfully use Windows Azure AD/ADFS/etc. without knowing any of the below, but I found that it helps to grok some of the basics. Up to you!

Why Do We Validate Tokens?

Why do we bother validating tokens, indeed?

When your Web application checks credentials directly, you verify that the username & password being presented correspond to the ones you keep in your database.

When you use claims-based identity, you outsource that job (and the job of keeping track of your users’ attributes) to an external authority/identity provider. Your responsibility shifts from verifying raw credentials to verifying that your caller did indeed go through your identity provider of choice and successfully authenticated. The identity provider represents successful authentication operations by issuing a token, hence your job now becomes validating that token. More about what that entails in just a moment.

Tokens and Protocols

Let’s take a second to clarify an important point: tokens != protocols.

Tokens are the form that issued credentials take to be transported, from authority to client and from client to resource.

Protocols detail the ceremony that apps use to send users to an authority for authentication, and that clients use to send tokens to apps to gain authenticated access. In other words, protocols are used for moving tokens.

Some protocols have a preference for specific token formats: the SAML protocol only works with SAML tokens, and OpenID Connect calls out JWT tokens specifically. Others can work with multiple token formats, provided that there’s some kind of binding: WS-Federation usually works with SAML, but can work with JWTs and pretty much anything that gets wrapped in a binary token. OAuth2 doesn’t prescribe any token format at all, given that it is mostly concerned with teaching apps how to be clients (and clients should not care about the format of the tokens they handle; that’s a matter between the authority and the resource).

Mechanics of Token Validation

Alrighty, what does it really mean to validate a token? It boils down to three things, really:

- Verify that it is well-formed

- Verify that it is coming from the intended authority

- Verify that it is meant for the current application

Let’s examine all those in order.

Well-formed

If we were all Econs, using language with perfect propriety at all times, we would probably call this phase “validation”. However, we usually lump all checks under that term, including the ones described later, hence I am forced to find a circumlocution like “verifying that a token is well-formed”.

When can you say that a token is well-formed? If the following holds:

A. The token is correctly formatted according to its intended format

B. The token has been received within its validity period, as validity is defined by the token’s format

C. The token has not been tampered with

Requirement A mostly means that the token correctly implements the format specification it is meant to follow. This is not so much a security requirement as a practical one: you’ll have parsing logic meant to work with SAML1.0, SAML1.1, SAML2.0, JWT or whatever other format you support, and you don’t want that logic to fail or to deal with ambiguous elements.
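To make requirement A concrete for JWT, here is a minimal Python sketch of the kind of strict parsing logic involved. It assumes the compact serialization (three dot-separated base64url segments) and uses only the standard library; the function names are mine, not from any product. One ambiguity it refuses outright is a JSON object with duplicate member names, which RFC 7519 says parsers must either reject or resolve to the lexically last one.

```python
import base64
import json


def b64url_decode(segment: str) -> bytes:
    """Decode one base64url segment; JWTs strip the '=' padding, so restore it."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def _no_duplicates(pairs):
    """json object_pairs_hook that rejects repeated member names."""
    obj = {}
    for name, value in pairs:
        if name in obj:
            raise ValueError(f"duplicate member name: {name!r}")
        obj[name] = value
    return obj


def parse_jwt(token: str):
    """Split a compact JWT into (header, payload, signature bytes) or raise ValueError."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("expected header.payload.signature")
    try:
        header = json.loads(b64url_decode(parts[0]), object_pairs_hook=_no_duplicates)
        payload = json.loads(b64url_decode(parts[1]), object_pairs_hook=_no_duplicates)
        signature = b64url_decode(parts[2])
    except ValueError as exc:  # covers bad base64, bad UTF-8, bad JSON, duplicates
        raise ValueError(f"malformed token: {exc}") from exc
    if not isinstance(header, dict) or not isinstance(payload, dict):
        raise ValueError("header and payload must be JSON objects")
    if "alg" not in header:
        raise ValueError("header lacks the 'alg' parameter")
    return header, payload, signature
```

Note that this only establishes that the token is parseable; nothing here says anything yet about who issued it or whether it was altered.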

About B: it’s a good idea for tokens to have limited validity, as that gives frequent opportunities to re-assess the authority’s assertions about the user. Without reasonably short validity periods, there would be no practical way of performing revocation.

Every token format defines some mechanism for expressing validity intervals: SAML has the NotBefore and NotOnOrAfter conditions, JWT has the nbf (not before) and exp (expiration time) claims, and so on. Verifying those is just a matter of parsing the values and comparing them with the application’s local time, allowing for some clock skew between the application and the authority.
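Here is a minimal sketch of the lifetime check for JWT, where the validity window is carried by the nbf and exp claims as NumericDate values (seconds since the Unix epoch). The five-minute default tolerance is an assumption for illustration; pick whatever skew fits your environment.

```python
import time
from typing import Optional


def check_lifetime(claims: dict, now: Optional[float] = None, skew: int = 300) -> None:
    """Raise ValueError unless nbf - skew <= now < exp + skew.

    Assumptions: 'exp' is required, 'nbf' is optional, and both are
    NumericDate values; the 300-second skew is an illustrative default.
    """
    now = time.time() if now is None else now  # the validating app's own clock
    if "exp" not in claims:
        raise ValueError("token has no 'exp' claim")
    if now >= claims["exp"] + skew:
        raise ValueError("token expired")
    if "nbf" in claims and now < claims["nbf"] - skew:
        raise ValueError("token not yet valid")
```

Passing `now` explicitly makes the check easy to test; in production you would let it default to the current time.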

Verifying that a token has not been tampered with is more complicated to explain, but it’s really not that complicated in itself.

All the token formats I listed establish that a token must be digitally signed by the authority that issues it. Signing is an operation that combines the data you want to protect with another piece of data, called a key. That combination generates a third piece of data, called the signature. The signature is typically sent with the token, accompanied by information about how the signing operation took place (which key was used, which specific algorithm was used).

An application receiving the token can perform the same operation, provided that it has access to the proper key: it can then compare the result with the signature it received – and if it comes out different, that means that something changed the token after issuance, invalidating it.
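The recompute-and-compare step above maps directly onto a symmetric signature such as HMAC-SHA256 (HS256 in JWT parlance), where the authority and the app share the same key. A minimal Python sketch, with a hypothetical key and token body:

```python
import hashlib
import hmac


def sign(data: bytes, key: bytes) -> bytes:
    """Authority side: combine the data with the key to produce a signature."""
    return hmac.new(key, data, hashlib.sha256).digest()


def verify(data: bytes, signature: bytes, key: bytes) -> bool:
    """App side: perform the same operation and compare the results."""
    return hmac.compare_digest(sign(data, key), signature)


key = b"shared-secret-known-to-authority-and-app"  # hypothetical key
token_body = b'{"iss":"https://authority.example/","aud":"my-app"}'
sig = sign(token_body, key)

print(verify(token_body, sig, key))                               # True
print(verify(token_body.replace(b"my-app", b"evil"), sig, key))   # False: tampered
```

`hmac.compare_digest` is used instead of `==` because it compares in constant time, so an attacker cannot learn how many leading bytes matched from response timings.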

Given their importance, I must make a special mention of one class of keys: X509 certificates. Without getting into the details, let’s say that certificates rely on a technology (public key crypto) that uses two different keys: one for signing (which remains private: only the authority has it) and one for verifying the signature (which is public, known by everyone and distributed via certificates). In order to verify a signature placed with a private key, an app receiving the token needs access to the certificate containing the corresponding public key.
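To show the asymmetry in action, here is a deliberately toy sketch of textbook RSA in Python: the authority signs with the private exponent, and anyone holding only the public values can verify. The tiny primes and the absence of any padding scheme make it completely insecure; real certificate keys are typically 2048 bits or more and signatures use padding such as PKCS#1 v1.5, so in practice you would rely on a vetted crypto library and the public key extracted from the authority’s certificate.

```python
import hashlib

# Textbook RSA with tiny hypothetical primes: ILLUSTRATION ONLY, NOT SECURE.
P, Q = 61, 53
N = P * Q                            # modulus: part of both keys
E = 17                               # public exponent: what a certificate publishes
D = pow(E, -1, (P - 1) * (Q - 1))    # private exponent: only the authority has it


def digest(data: bytes) -> int:
    """Hash the data and squeeze it into range mod N (real schemes pad instead)."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % N


def sign_with_private_key(data: bytes) -> int:
    """Authority side: needs the private exponent D."""
    return pow(digest(data), D, N)


def verify_with_public_key(data: bytes, signature: int) -> bool:
    """App side: needs only the public values (N, E)."""
    return pow(signature, E, N) == digest(data)


body = b'{"iss":"https://authority.example/"}'
sig = sign_with_private_key(body)
print(verify_with_public_key(body, sig))            # True
print(verify_with_public_key(body, (sig + 1) % N))  # False: altered signature
```

The point is only the division of labor: the app never needs D, which is why a certificate (public key) is all you have to configure on the validating side.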

Those three tasks establish whether a token “looks good” regardless of the context in which it has been issued and sent, that is, whether it is well-formed. The next checks get into the specifics of the issuer and of the app being accessed.

Coming from the Intended Authority

You outsource authentication to a given authority because you trust it – but as you do so, it becomes critical to be able to verify that authentication did take place with your authority of choice and no other.

Tokens are designed to advertise their origin as clearly and unambiguously as possible. There are two main mechanisms used here, often used together.

- Signature verification. The key used to sign the issued token is uniquely associated with the issuing authority, hence a token signed with a key you know to be associated with a certain authority gives you mathematical certainty (modulo stolen keys) that the token originated from that authority.

- Issuer value. Every authority is characterized by a unique identifier, typically assigned as part of the representation of that authority within the protocol through which the token has been requested and received. That is often a URI. Different token formats typically carry that information in a specific place, like a particular claim type, which the validation logic parses and compares with the expected value.

In classic claims-based identity, every authority has both its own key and its own identifier. In scenarios involving identity as a service, however, that might not be the case. For example: in Windows Azure Active Directory the token issuing infrastructure is shared across multiple tenants, each representing a distinct business entity. Issued tokens are signed with the Windows Azure AD key, common to all tenants, hence the main differentiation between tenants is reflected by the different issuer identifier found in the token. To be more concrete: a Contoso employee accessing a Contoso line of business app secured by Windows Azure AD will send a token signed by the same key used by a Fabrikam employee accessing a Fabrikam line of business app – but in the first case the token will have an Issuer value corresponding to Contoso’s tenant ID, whereas in the latter it will have the Fabrikam tenant ID. If the Contoso employee attempted to access the Fabrikam app by sending his token, he’d fail, because the validation logic in front of Fabrikam’s app would find Contoso’s tenant ID in the incoming token instead of the expected Fabrikam tenant ID.
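Here is a minimal sketch of why the issuer check carries the weight in the shared-key scenario. Since the Python standard library has no RSA support, an HMAC key stands in for the Windows Azure AD signing key common to all tenants; the tenant-based issuer values are hypothetical placeholders, not real tenant IDs.

```python
import hashlib
import hmac
import json

# Hypothetical stand-in for the signing key shared by every tenant.
SHARED_KEY = b"hypothetical-key-shared-by-all-tenants"
CONTOSO_ISSUER = "https://sts.windows.net/contoso-tenant-id/"    # placeholder
FABRIKAM_ISSUER = "https://sts.windows.net/fabrikam-tenant-id/"  # placeholder


def issue(claims: dict) -> tuple:
    """Shared infrastructure: every tenant's tokens are signed with the same key."""
    body = json.dumps(claims, sort_keys=True).encode()
    return body, hmac.new(SHARED_KEY, body, hashlib.sha256).digest()


def accept(body: bytes, sig: bytes, expected_issuer: str) -> bool:
    """Validation logic in front of one tenant's app."""
    expected_sig = hmac.new(SHARED_KEY, body, hashlib.sha256).digest()
    if not hmac.compare_digest(expected_sig, sig):
        return False  # not signed by the shared infrastructure at all
    # The signature alone cannot tell tenants apart; the issuer value can.
    return json.loads(body).get("iss") == expected_issuer


contoso_token = issue({"iss": CONTOSO_ISSUER, "sub": "alice"})
print(accept(*contoso_token, expected_issuer=CONTOSO_ISSUER))   # True
print(accept(*contoso_token, expected_issuer=FABRIKAM_ISSUER))  # False
```

The Contoso token passes the signature check in both calls; only the issuer comparison stops it at Fabrikam’s door.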

Both the signature verification key and the issuer ID value are often available through some advertising mechanism supported by the authority, such as metadata & discovery documents. In practice, that means that you often don’t need to specify either value directly: as long as your validation software knows how to get to the metadata of your authority, it will have access to the key and issuer ID values.
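For illustration, here is a sketch of pulling the issuer and signing keys out of an authority’s published documents. It assumes the OpenID Connect discovery format (an `issuer` field plus a `jwks_uri` pointing at a JSON Web Key Set) rather than WS-Federation XML metadata; both documents are hypothetical, inlined instead of fetched, and trimmed to the fields used here.

```python
import json

# Hypothetical, trimmed discovery document. OpenID Connect serves the real one
# at <issuer>/.well-known/openid-configuration.
DISCOVERY_JSON = """
{
  "issuer": "https://authority.example/tenant-id/",
  "jwks_uri": "https://authority.example/discovery/keys",
  "id_token_signing_alg_values_supported": ["RS256"]
}
"""

# Hypothetical JSON Web Key Set, as it might be served from jwks_uri.
JWKS_JSON = """
{"keys": [{"kty": "RSA", "use": "sig", "kid": "key-1",
           "x5c": ["<base64 DER certificate>"]}]}
"""


def validation_material(discovery: dict, jwks: dict) -> dict:
    """Collect what the validator can learn from the authority's own documents."""
    return {
        "issuer": discovery["issuer"],
        # Keep only signing keys, indexed by key ID so a token header can pick one.
        "signing_keys": {k["kid"]: k for k in jwks["keys"] if k.get("use", "sig") == "sig"},
        # Note: no audience here; that has to come from the app's own configuration.
    }


material = validation_material(json.loads(DISCOVERY_JSON), json.loads(JWKS_JSON))
print(material["issuer"])
print(sorted(material["signing_keys"]))  # ['key-1']
```

Publishing keys this way also lets the authority roll its keys without every app having to be reconfigured by hand.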

Intended for the Current Application

Tokens usually contain a claim meant to indicate the target app they have been issued for. As for the issuer, the identifier used to indicate the app is typically the representation of the app within the protocol used for obtaining the token.

That part of the token is often referred to as the audience of the token. Its purpose is the same as the payee field on a bank check: ensuring that only the intended beneficiary can take advantage of it. An application receiving a token should always verify that the token’s audience corresponds to the app’s own identifier: any discrepancy should be considered an attack.

Imagine that Contoso is using two different applications, A and B, from two different SaaS providers. Say that one employee obtains a token for A and sends it over. A could take that token and use it to access B, pretending to be the employee: the authority is what B expects, the token has not been tampered with, it is still within its validity period… however, as soon as B verifies the audience, it will discover that the token was originally meant for A and not B itself – making the token invalid for accessing B and averting the token forwarding attack.
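The audience check itself is tiny. A sketch, assuming JWT claims already parsed into a dict and hypothetical identifiers for apps A and B; RFC 7519 allows `aud` to be either a single string or an array of strings, so the sketch accepts both.

```python
from typing import List, Optional, Union


def audience_matches(aud_claim: Optional[Union[str, List[str]]], my_audience: str) -> bool:
    """True if this app's identifier appears in the token's 'aud' claim."""
    if aud_claim is None:
        return False  # no audience at all: reject
    audiences = [aud_claim] if isinstance(aud_claim, str) else list(aud_claim)
    return my_audience in audiences


# Hypothetical identifiers for the two SaaS apps in the scenario above.
APP_A = "https://app-a.example/"
APP_B = "https://app-b.example/"

token_for_a = {"iss": "https://contoso-authority.example/", "aud": APP_A}
print(audience_matches(token_for_a["aud"], APP_A))  # True: A accepts it
print(audience_matches(token_for_a["aud"], APP_B))  # False: forwarded to B, rejected
```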

This check has an interesting property: whereas the issuer value and signing keys are often discoverable from the authority via some specific mechanism (metadata documents, for example), the audience value must always be specified on a per-app basis. In other words, typically the only source of truth for what audience value should be considered valid for a particular resource is the resource itself: the developer includes that value as part of the resource configuration.

Other Checks

The checks described are the indispensable verifications one must perform to assess the validity of an incoming token. That is just the beginning, of course: knowing that the caller is actually Bob from Contoso does not mean that he has access to the resource he is requesting; rather, it gives you the information you need for performing any subsequent authorization checks required by the business logic your app implements.

Specific resources and app types might consider some subsequent checks so important that they should really be part of the token validation proper – examples include the value in the scope claim for OAuth2 bearer flows. Many middlewares offer extensibility points you can use for injecting your own validation logic in the pipeline; but it is also common to add the extra checks directly in the app, in constructs typical of the stack used (e.g. filters & attributes in MVC) or even in the first few lines of a method call.

In the end, all that matters is that the logic is executed before access is granted, which in practice usually means before the interesting app code gets to execute. As long as you are satisfied that this happens, you can choose to draw the logical line that groups the “token validation logic” wherever you deem most appropriate.
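As one example of putting an extra check “in the first few lines” of the app, here is a Python decorator sketch that enforces a required scope before the handler runs. It assumes the token has already passed the checks above and its claims are handed over as a dict, that scopes arrive as a space-separated string, and that the claim is named `scp` (as in Windows Azure AD access tokens; other issuers may use `scope`). The `Forbidden` exception and the `orders.read` scope are hypothetical.

```python
import functools


class Forbidden(Exception):
    """Hypothetical error type, raised before any app code runs."""


def require_scope(scope: str, claim: str = "scp"):
    """Decorator sketch: check a space-separated scope claim before the handler."""
    def decorator(handler):
        @functools.wraps(handler)
        def wrapper(claims: dict, *args, **kwargs):
            granted = claims.get(claim, "").split()
            if scope not in granted:
                raise Forbidden(f"missing scope {scope!r}")
            return handler(claims, *args, **kwargs)
        return wrapper
    return decorator


@require_scope("orders.read")  # hypothetical scope name
def list_orders(claims: dict) -> list:
    return ["order-1", "order-2"]  # the "interesting app code"
```

Calling `list_orders({"scp": "profile orders.read"})` reaches the handler, while `list_orders({"scp": "profile"})` raises `Forbidden` without executing it, which is exactly the “before access is granted” property.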

An Example

A bit abstract, but hopefully not too abstract.

Here, let’s illustrate the concepts with one example.

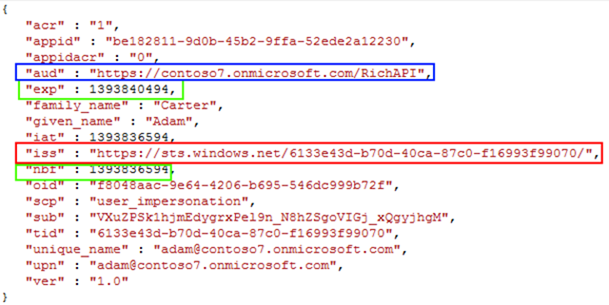

Say that my resource is a Web API, protected by Windows Azure AD and specifically by the Contoso7 tenant. Say that we want to use JWT.

In order to be considered valid, an incoming JWT will have to comply with the following:

- Be signed by the certificate MIIDPjCCAiqgAwIBAgIQs[..SNIP.]WLAIarZ

- Have the value https://sts.windows.net/6133e43d-b70d-40ca-87c0-f16993f99070/ in the iss (issuer) claim

- Have the value https://contoso7.onmicrosoft.com/RichAPI in the aud (audience) claim

…and of course it will have to be still valid, not having been tampered with, and so on.

The first 2 values came straight from the Contoso7 metadata document. The audience corresponds to the ID I have chosen to assign to my API when I provisioned it in my directory.
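One way to picture the split between discovered and configured values is as a small settings object. The class and field names below are hypothetical (the .NET handler and middleware have their own parameter types), and the certificate stays elided exactly as above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ValidationParameters:
    # Discoverable: both came from the Contoso7 metadata document.
    valid_issuer: str
    signing_certificate: str
    # Not discoverable: chosen when the API was provisioned in the directory.
    valid_audience: str


contoso7_rich_api = ValidationParameters(
    valid_issuer="https://sts.windows.net/6133e43d-b70d-40ca-87c0-f16993f99070/",
    signing_certificate="MIIDPjCCAiqgAwIBAgIQs[..SNIP.]WLAIarZ",  # elided, as in the post
    valid_audience="https://contoso7.onmicrosoft.com/RichAPI",
)
```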

Let’s take a look at a token satisfying the above.

You can see highlighted in red the issuer value, in blue the audience, and in green the relevant claims used to assess whether the token is expired. See? It’s really not magic!

That is not the token in its entirety, but only the “body”. JWTs typically have

- a header (not shown) detailing the algorithm used for signing and a reference to the key used

- a payload carrying the claims

- the signature value (not shown)

Each part is base64url-encoded, hence you would not see the above as readable text in Fiddler.
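To see why the encoded token is opaque, here is a sketch that assembles a compact JWT from its three parts. The `x5t` header value, the nbf/exp timestamps and the signature bytes are placeholders; in a real token the signature is computed over the encoded header, a dot, and the encoded payload.

```python
import base64
import json


def b64url(data: bytes) -> str:
    """base64url encoding without '=' padding, as used by compact JWTs."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


header = {"typ": "JWT", "alg": "RS256", "x5t": "hypothetical-thumbprint"}
payload = {
    "aud": "https://contoso7.onmicrosoft.com/RichAPI",
    "iss": "https://sts.windows.net/6133e43d-b70d-40ca-87c0-f16993f99070/",
    "nbf": 1394000000,  # hypothetical NumericDate values (seconds since epoch)
    "exp": 1394003600,
}

encoded_header = b64url(json.dumps(header, separators=(",", ":")).encode())
encoded_payload = b64url(json.dumps(payload, separators=(",", ":")).encode())
signing_input = f"{encoded_header}.{encoded_payload}"  # what the authority signs
signature = b"placeholder: a real token carries RS256 output over signing_input"

token = f"{signing_input}.{b64url(signature)}"
print(token[:60] + "...")  # opaque base64url text, not readable JSON
```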

Currently we offer various ways for you to inject validation logic in front of your application. You can choose to work directly with the JWT token handler class, as shown here, and configure it to use the above values as validation parameters. You can choose to use the OWIN middleware, as shown here, which makes your job much easier but gives less control. You might even go completely manual and re-implement the JWT parsing & validating logic yourself, though that would be more work than you’d likely want to do given that ready components are available. The key point is, ultimately all those techniques will perform the checks described above.

Wrapup

Well, I got to the end of the post and to my chagrin Sandra Bullock did not get an Oscar… and Her got far less recognition than it deserved IMO.

On the other hand, hopefully you now have a deeper understanding of why we configure our validation middleware the way we do… hence I didn’t completely waste the evening!

The above contains a lot of simplifications, and does not really give any details about another crucial component which requires configuration, the protocol parts; however, it provides me with a base I can use to explain more complicated scenarios (like this one, which is going to be so much easier with the new OWIN components).

Let me know if you think that those “back to basics” posts are useful – if they are, I’ll make sure to keep them coming!
